Judging predictions of rainfall

The overheard conversation from the other day suggests a curiosity about a supremely important aspect of statistical prediction: validating models.

In essence, the men on the street were using the outcome (raindrops) to pass judgment on the weather forecasters.

How should one use statistical thinking to deal with this problem?

***

Forecasters issue probabilities, e.g. a 20 percent chance of rain today. At the end of each day, the forecast issued the day before can be evaluated: either it rained or it did not. While the forecast is a probability, the outcome is a binary yes/no, and this is why validation is not straightforward. As the men on the street showed, a single day's worth of data (one Yes or one No) does not provide sufficient evidence to judge the accuracy of the forecast: a rainy day does not prove a 20 percent forecast wrong, and a dry day does not prove it right.

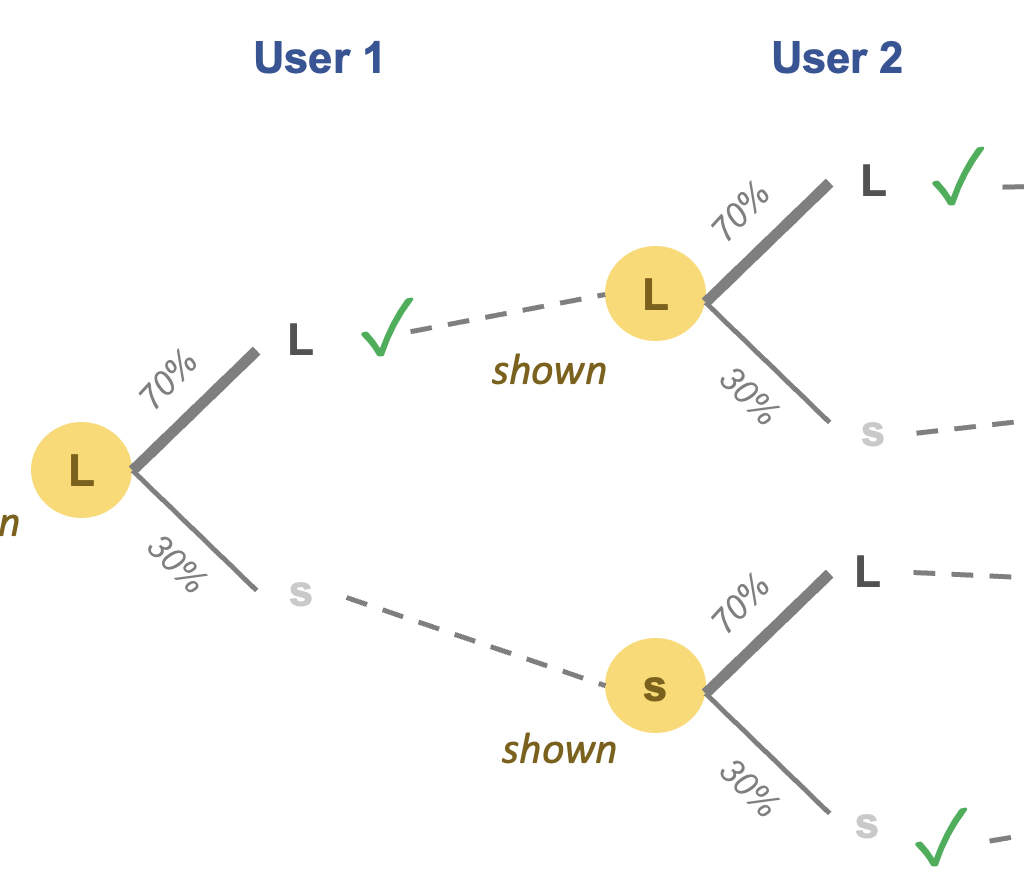

When a statistician predicts a 20 percent chance of something happening, she is imagining alternative universes. Standing in today, she imagines all possible tomorrows: in one universe, tomorrow brings 1 inch of rain; in another, 0.1 inch; in still others, no rain at all. When she says a 20 percent chance, she means that in 20 percent of all possible scenarios for tomorrow, she expects to see rain.
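The alternative-universes view can be mimicked with a small simulation. The minimal Python sketch below assumes a toy model in which each imagined tomorrow independently brings rain with probability 0.2; `simulate_tomorrows` is a hypothetical helper, and rain amounts are ignored.

```python
import random

def simulate_tomorrows(p_rain: float, n_universes: int, seed: int = 0) -> float:
    """Fraction of simulated tomorrows that bring rain.

    Toy model (an assumption): each imagined tomorrow independently brings rain
    with probability p_rain. Rain amounts are ignored; only rain vs. no rain counts.
    """
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    rainy = sum(1 for _ in range(n_universes) if rng.random() < p_rain)
    return rainy / n_universes

share = simulate_tomorrows(0.2, 100_000)
print(f"Share of simulated tomorrows with rain: {share:.3f}")
```

With many simulated tomorrows, the share of rainy ones settles close to 0.2. The catch is that a computer can replay tomorrow as often as we like, while reality plays it only once.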

Since tomorrow only occurs once, we have a problem: we cannot directly verify such an assertion. What to do?

***

We can, however, verify this assertion indirectly. Do the following:

- On every day for which the forecast was 20 percent, note the actual outcome (rain or no rain).

- Calculate the proportion of such days in which it actually rained.

- Do the same for 30-percent forecasts, 40-percent forecasts, and so on. (In practice, you would group forecasts into ranges of probabilities rather than use exact probabilities.)

- If the actual proportion of rainy days mirrors the forecast probabilities, we can be confident that the forecasters are doing a good job.
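The recipe above is what forecasters call a calibration (or reliability) check. Here is a minimal Python sketch of it. The function name `calibration_table`, the 10-point ranges, and the records in the usage lines are all hypothetical, invented for illustration rather than taken from real forecasts. Forecasts are kept in whole percent to avoid floating-point surprises at range boundaries.

```python
from collections import defaultdict

def calibration_table(forecasts_pct, rained, bin_width=10):
    """Compare forecast rain probabilities with how often it actually rained.

    forecasts_pct: forecast chance of rain for each day, in whole percent (0-100).
    rained:        True if it rained that day, False otherwise.
    bin_width:     width of each forecast range in percentage points (should divide 100).
    Returns rows of (range_low, range_high, n_days, mean_forecast_pct, observed_pct).
    """
    top_bin = 100 // bin_width - 1  # a 100 percent forecast joins the top range
    groups = defaultdict(list)
    for p, r in zip(forecasts_pct, rained):
        groups[min(p // bin_width, top_bin)].append((p, r))

    rows = []
    for b in sorted(groups):
        days = groups[b]
        n = len(days)
        mean_forecast = sum(p for p, _ in days) / n
        observed = 100 * sum(r for _, r in days) / n  # True counts as 1
        low = b * bin_width
        high = 100 if b == top_bin else low + bin_width - 1
        rows.append((low, high, n, mean_forecast, observed))
    return rows

# Hypothetical records, invented purely for illustration (not real forecasts).
forecasts = [20, 20, 20, 20, 20, 30, 30, 30, 70, 70]
rain = [False, False, True, False, False, False, True, False, True, True]
for low, high, n, mean_f, obs in calibration_table(forecasts, rain):
    print(f"{low:>3}-{high:<3}%  days={n:<2}  forecast={mean_f:5.1f}%  rained={obs:5.1f}%")
```

Each row pairs a forecast range with how often it actually rained. A well-calibrated forecaster shows observed percentages close to the forecasts; with only a handful of days per range, as in this toy input, the percentages are too noisy to mean much.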

Notice the sleight of hand. In the idealistic version, the base consists of all possible tomorrows, and each day-ahead forecast could be validated on its own. In the realistic version, we abandon hope of validating each forecast and instead validate groups of forecasts.
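Why do groups of forecasts succeed where a single day fails? A minimal Python sketch, assuming rainy days are independent (real weather is not, since wet spells cluster, so treat this as an idealization): under a calibrated 20 percent forecast, the number of rainy days out of n follows a binomial distribution, and `plausible_range` (a hypothetical helper) reports the central 95 percent of that distribution.

```python
from math import exp, lgamma, log

def binom_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k rainy days out of n, if each day independently
    rains with probability p (requires 0 < p < 1). Computed in log space so
    that large n does not overflow."""
    log_coef = lgamma(n + 1) - lgamma(k + 1) - lgamma(n - k + 1)
    return exp(log_coef + k * log(p) + (n - k) * log(1 - p))

def plausible_range(n_days: int, p: float, coverage: float = 0.95) -> tuple[int, int]:
    """Central `coverage` range of rainy-day counts that a calibrated forecast
    of probability p would typically produce over n_days independent days."""
    tail = (1 - coverage) / 2
    cdf = 0.0
    low = high = None
    for k in range(n_days + 1):
        cdf += binom_pmf(k, n_days, p)
        if low is None and cdf >= tail:
            low = k
        if high is None and cdf >= 1 - tail:
            high = k
    if high is None:  # guard against round-off keeping cdf just below 1 - tail
        high = n_days
    return low, high

for n_days in (1, 10, 100, 1000):
    low, high = plausible_range(n_days, 0.2)
    print(f"{n_days:>4} days at 20%: {low}-{high} rainy days "
          f"({100 * low / n_days:.0f}%-{100 * high / n_days:.0f}%)")
```

For a single day the range is 0 to 1 rainy days, so either outcome is consistent with a 20 percent forecast; that is exactly the street-corner dilemma. As the number of days grows, the plausible range, expressed as a percentage, tightens around 20 percent, so an observed rate far outside it becomes real evidence against the forecasts.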

Please leave a comment if you have seen this sort of validation for weather forecasts, or if you know of other ways to do validation.