Notes on vibe coding 4

Further adventures into the world of vibe coding

I wasn't expecting to be writing about vibe coding again so soon. But here I am.

Two blog posts ago (here), I felt quite satisfied that I had managed to migrate some blog posts from Typepad to Ghost using a piece of code written completely by AI (albeit with my steering). I haven't read the code itself.

As I geared up to move larger chunks of the blog over, I started to notice previously unknown problems. These issues all necessitated updates to the code. In my vibe-coding experiment, I'd feed the anomalies back to the AI coder, and when it got stuck, I'd steer it out of trouble.

It actually got stuck more often than I'd have liked. It seemed to perform better when given free rein to write on a blank slate, but it was quite ineffective when the starting point was a functional program and it was asked to make small tweaks to fix specific issues.

A small side track to think about the nature of bug fixing. Since the previous code is functional, an important objective is "if it ain't broke, don't fix it". Touch it lightly, to reduce the chance of creating even more problems. So, I cringe every time the reasoning steps include unsolicited "optimization": stuff like "I see that your current code for doing X is not efficient, and I am going to fix it by..." makes my stomach churn.

A particularly apoplectic moment occurred when the AI coder decided to change the parameter of my main function from "force-tags" to "forced-tags." As a result, when I ran the corrected function with the previous set of parameters, it popped a syntax error. Why on earth - or in the multiverse - would it do that? (Ironically, when the original AI coder wrote "force-tags" instead of the more grammatical "forced-tags", I cringed but suppressed my urge to "fix" it, by which I mean, to break it.)
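To make the breakage concrete, here is a minimal sketch of how such a rename can be made harmless. It assumes the parameter is a command-line flag parsed with Python's `argparse`, which I haven't confirmed since I never read the generated code. The program name `migrate`, the function names, and the comma-separated tag format are all hypothetical.

```python
import argparse
from typing import List, Sequence


def build_parser(accept_old_name: bool = True) -> argparse.ArgumentParser:
    """Build a parser for a hypothetical migration script."""
    parser = argparse.ArgumentParser(prog="migrate")
    names = ["--forced-tags"]  # the renamed flag
    if accept_old_name:
        # Keep the original spelling as an alias so old invocations still run.
        names.append("--force-tags")
    # Both spellings write to the same attribute, args.forced_tags.
    parser.add_argument(*names, dest="forced_tags", default="")
    return parser


def parse_tags(argv: Sequence[str], accept_old_name: bool = True) -> List[str]:
    """Return the tags passed on the command line as a cleaned-up list."""
    args = build_parser(accept_old_name).parse_args(argv)
    return [tag.strip() for tag in args.forced_tags.split(",") if tag.strip()]
```

With the alias in place, `parse_tags(["--force-tags", "charts, maps"])` and `parse_tags(["--forced-tags", "charts, maps"])` return the same list. Without it (`accept_old_name=False`), the old spelling makes `argparse` exit with an "unrecognized arguments" error, which is roughly the kind of failure I ran into.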

The first big problem I encountered was missing posts. As it turned out, those posts weren't actually missing; they were lost in the crowd, so to speak.

I'm going to migrate 19 years of posts in stages. That's because I'm pretty sure unknown problems will pop up, so I am starting with small batches; at some point, when I have sufficient confidence, I will move large chunks of posts all at once.

Step 1 is to use some criteria to extract a subset of posts to migrate over. Today, I selected about 200 posts. Step 2 is to find all the images on those 200 posts, rename those images using my indexing strategy (covered here), and upload these images to Ghost.

The heretofore satisfactory code failed to find all 200 blog posts. I was missing images from about 10 posts (after excluding those posts that did not contain images). That was very odd since, when I looked into the input files, I could definitely see the 10 posts and the associated images, with their customized file names.

I will spare you this journey because I wasted a few hours while GPT5 came up with seemingly endless, useless ideas, after which it still had not a clue what was going on. It is one inexhaustible fount of throw-at-the-wall stuff. Nothing sticks.

During this slow crawl, I discovered that OpenAI sneakily switched my model to "GPT5 not thinking". I'm calling them out here. I was using GPT5 Thinking from the start. I suppose they didn't like the amount of work I was throwing at it recently, and decided to quietly unburden themselves. This, I believe, explains some of the incompetency I encountered today, versus previous work.

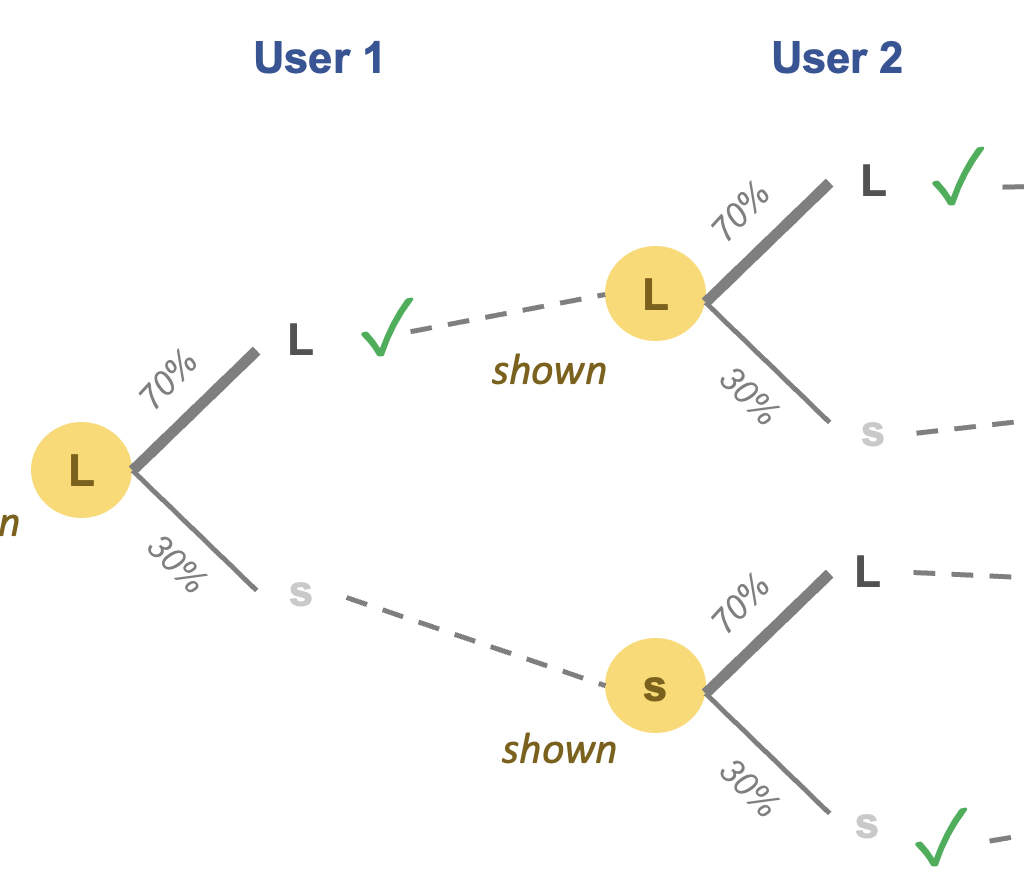

At this point, the AI coder and I had become a team. I was running diagnostic tests on the side. What I discovered: if I pulled those unmatched URLs from the error log, put them in their own file, and ran the same code on this much smaller set of links, the AI code managed to find those 10 posts and pull the images out.

This is terribly confusing. But that little test gave me life. I abandoned the effort to fix the code. I just divided the posts into two groups, and processed them successively. Problem solved.
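The workaround amounts to rerunning the same code on whatever it missed the first time. Here is a rough sketch of that idea, not the actual migration code: `process` stands in for the AI-written routine, and I'm assuming it can report which URLs it handled. All names here are hypothetical, and the sketch does nothing to explain why the first pass missed those posts.

```python
from typing import Callable, Iterable, List, Set, Tuple


def run_in_passes(
    urls: Iterable[str],
    process: Callable[[List[str]], Set[str]],
    max_passes: int = 3,
) -> Tuple[List[str], List[str]]:
    """Run `process` on the URLs, then rerun it on the leftovers only.

    `process` takes a batch of URLs and returns the subset it handled.
    Returns (handled, still_unmatched), both in their original order.
    """
    pending = list(urls)
    handled: List[str] = []
    for _ in range(max_passes):
        if not pending:
            break
        matched = process(pending)
        handled.extend(u for u in pending if u in matched)
        remaining = [u for u in pending if u not in matched]
        if len(remaining) == len(pending):
            break  # no progress on this pass, so stop retrying
        pending = remaining
    return handled, pending
```

In my case two passes were enough: the first covered most of the roughly 200 posts, and the second picked up the 10 or so that had been left unmatched.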

In the meantime, the AI coder wasn't giving up. It threw out even more suggestions for further fixes. Any bets on whether those fixes would work?

For giggles, these were GPT5's famous last words before I jumped off the ship.

It "smelled" a rat here, but it smelled raccoon, hyena and Labubu before, none of which was sighted.

In the next post, I'll cover another unexpected problem.