How do you react to a probability forecast?

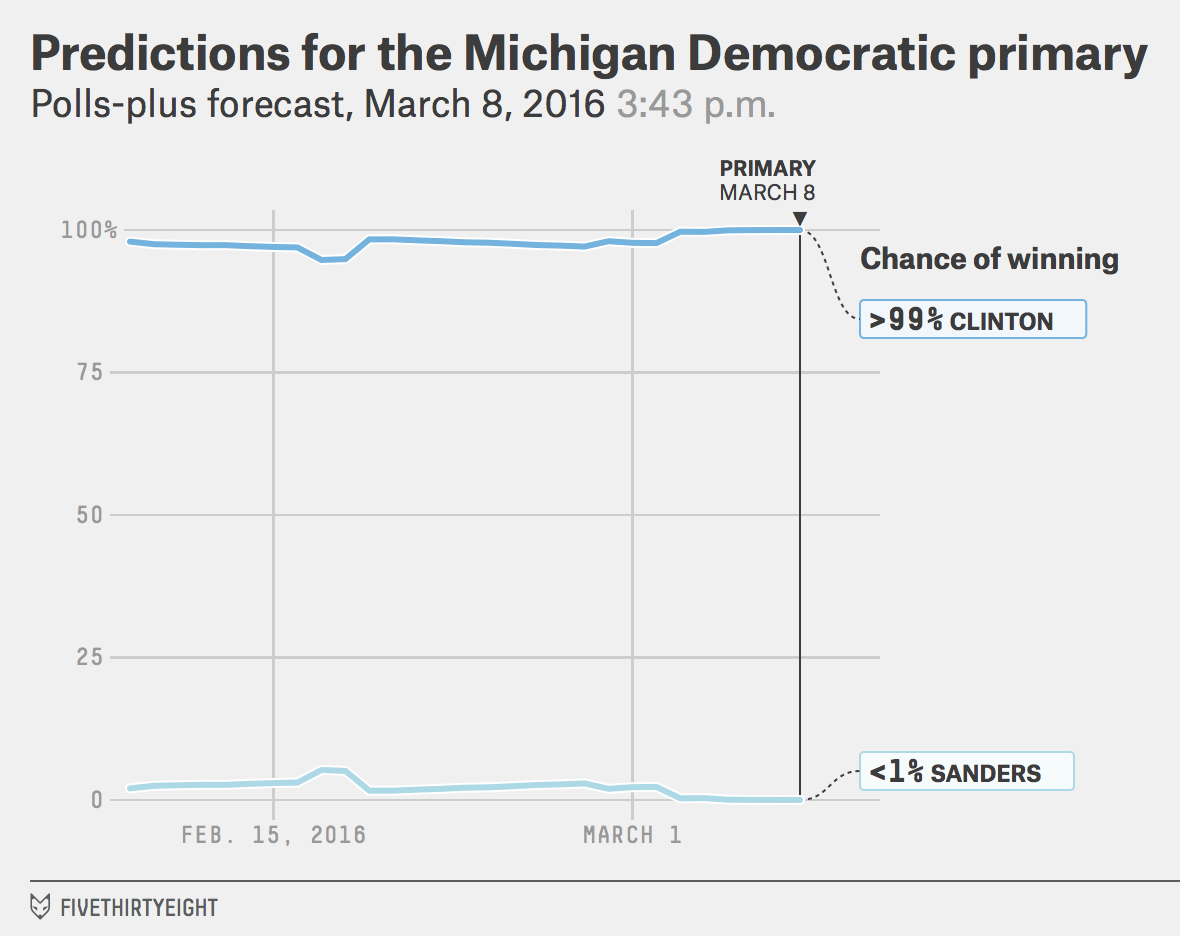

There is a healthy debate going on about last night's Michigan primary on the Democratic side, in which Bernie Sanders pulled off a major upset. Polls leading up to the primary put Hillary Clinton ahead by about 20 percentage points, which would be a huge margin. FiveThirtyEight, which has been doing a stellar job covering the elections, relies heavily on polls in generating its forecasts, and it had predicted that Clinton would win with greater than 99% probability.

The trendy thing to do these days is to issue probability forecasts. I have found it frustrating to be on the receiving end of such forecasts.

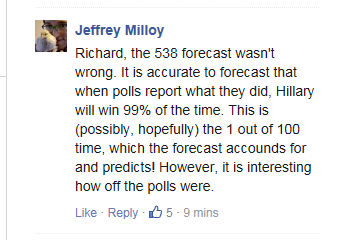

Some 538 readers took this opportunity to knock Nate Silver (plus almost every other forecaster) for getting Michigan completely wrong. But Nate has his supporters too. Here is a typical reaction:

From a purely technical perspective, this statement is correct. But the same statement would have been correct had the forecast been 98%, 97%, ..., or 1%!

In theory, given enough repeated observations, one can measure the accuracy of such forecasts. But presidential elections occur only once every four years, so there isn't enough data to do this.
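To make "sufficient repeated observations" concrete, here is a minimal sketch in Python of a calibration check. It runs on simulated forecasts, not real polling or election data, and the function name `calibration_table` and the ten-bin grouping are illustrative assumptions rather than anything 538 uses. The idea is to group forecasts by stated probability and compare each group's average forecast with how often the event actually happened.

```python
import random
from collections import defaultdict


def calibration_table(forecasts, outcomes, n_bins=10):
    """Bin forecasts by stated probability and return, per bin,
    (mean forecast, observed frequency, number of forecasts)."""
    bins = defaultdict(list)
    for p, y in zip(forecasts, outcomes):
        # Clamp so that a forecast of exactly 1.0 lands in the top bin.
        idx = min(int(p * n_bins), n_bins - 1)
        bins[idx].append((p, y))

    table = []
    for idx in sorted(bins):
        pairs = bins[idx]
        mean_p = sum(p for p, _ in pairs) / len(pairs)
        freq = sum(y for _, y in pairs) / len(pairs)
        table.append((mean_p, freq, len(pairs)))
    return table


# Simulated example: a forecaster whose stated probabilities are correct
# by construction, observed over many independent events.
rng = random.Random(0)
forecasts = [rng.random() for _ in range(10_000)]
outcomes = [1 if rng.random() < p else 0 for p in forecasts]

for mean_p, freq, n in calibration_table(forecasts, outcomes):
    print(f"forecast ~{mean_p:.2f}  observed {freq:.2f}  (n={n})")
```

With thousands of events, the two columns should roughly line up for a well-calibrated forecaster. With a handful of elections, most bins would be empty or hold one or two outcomes, which is exactly the data problem described above.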

I am not piling on 538. I do think they are the best in the business, and I respect Nate's ability. But there is the issue of evaluating accuracy when it comes to probability forecasts. If someone says there is a 60% chance something will happen, and it happens, is that accurate? If it doesn't happen, is that accurate?
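One standard way to put a number on a probability forecast is the Brier score, the mean squared difference between the stated probability and the 0/1 outcome (lower is better). The sketch below is only an illustration of that scoring rule on the 60% example; the helper name `brier_score` is an assumption, and nothing here reflects how 538 evaluates itself.

```python
def brier_score(forecasts, outcomes):
    """Mean squared error between forecast probabilities and 0/1 outcomes."""
    return sum((p - y) ** 2 for p, y in zip(forecasts, outcomes)) / len(forecasts)


# A single 60% forecast, scored under both possible outcomes.
print("event happens:       ", brier_score([0.6], [1]))
print("event does not occur:", brier_score([0.6], [0]))

# For comparison, a forecaster who always says 50% scores 0.25 either way.
print("coin-flip forecast:  ", brier_score([0.5], [1]))
```

The 60% forecast scores better than a coin flip if the event happens and worse if it doesn't, but one score on one event says very little. The score only becomes informative when averaged over many forecasts.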

The other reason given above for the miss is that one can't blame the model if the input data, in this case the polling data, were bad. This again is technically correct. It is a version of garbage in, garbage out. But as someone who builds models, I must admit that using this reason is a cop-out. (Just make a model that clings to the polling data.)