The failed coup against standardized testing

Why the SAT found its way back into admissions offices

Standardized tests such as the SAT and ACT have attracted relentless critics over the years. These critics argue that the tests are biased against minorities and the less well-to-do. Recently, many top U.S. colleges have been running an experiment: they suspended the SAT/ACT requirement for admissions during the Covid-19 pandemic.

By the end of 2025, one school after another had backtracked and reinstated the standardized test requirement. MIT (2022, link), Yale (2024, link), Harvard (2024, link), Princeton (2025, link; alma mater, why so late?), and Stanford (2025, link) have all changed their minds. Every school that reversed course pointed to data showing that the cohorts enrolled during the test-optional period were plainly unprepared for college.

In this Wall Street Journal editorial, the editors cited a dismal finding from the University of California (a top public university system):

About half of UC campus math chairs say that the “number of first-year students that are unable to start in college-level precalculus”—which used to be a standard course for California’s top high school sophomores—doubled over the last five years.

Wait, what about the other half of the campuses?

The other half of chairs said the number tripled.

Harvard started offering a remedial high-school math course (link) to incoming first-year students to help them catch up. The University of California, San Diego launched its remedial course 10 years ago and recently saw enrollment jump tenfold (link).

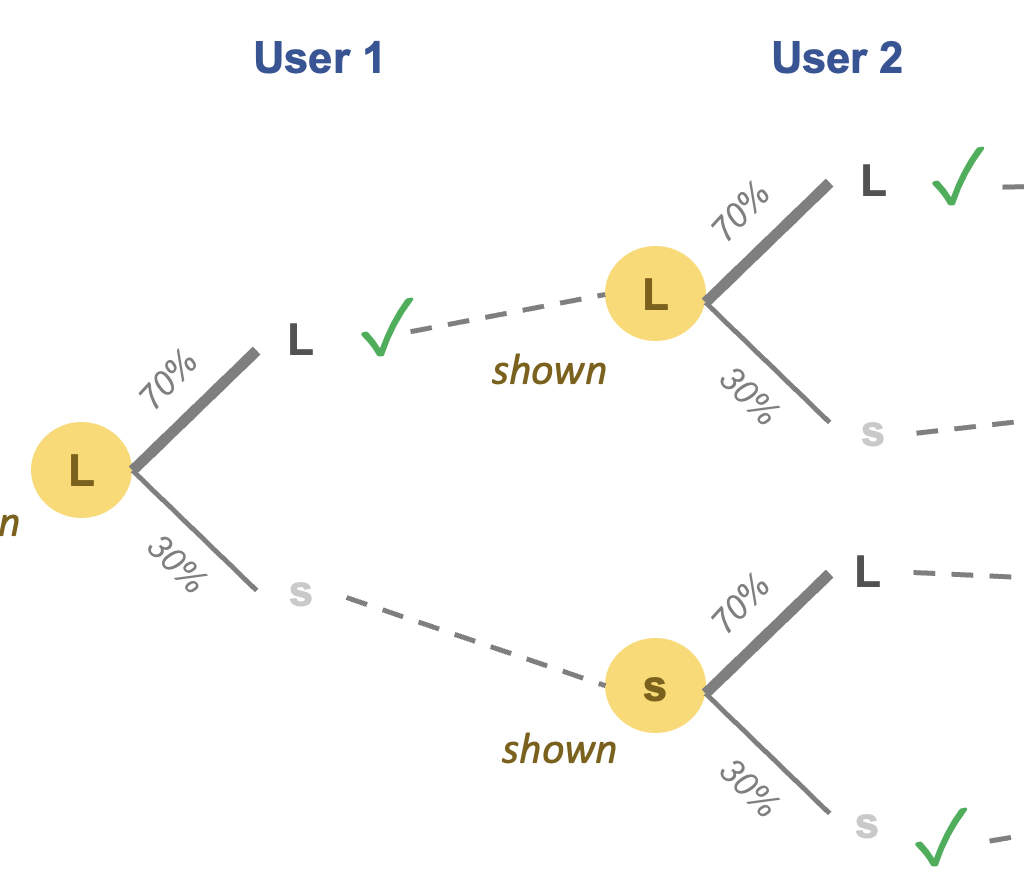

The move to drop standardized testing was always going to be a disaster. Having read applications (for graduate schools), I can say that test scores are the only item in an applicant's file that is interpretable.

High school GPAs are meaningless because the admissions officer lacks any context to interpret the data. As college professors do, high school teachers are giving away top grades like Halloween candy.

Transcripts present the same problem as GPAs: no context. There is never enough time to read course titles and infer what level each course is at, and there is certainly no point staring at individual grades without knowing what proportion of the class received the same grade.

Teacher references are not much better. Most references are vapidly nice, without distinguishing one student from another.

Once in a blue moon, I come across a teacher slamming a student. My first reaction is: why did the teacher bother to write it? My second reaction is: poor student, who mistakenly assumed that s/he was on good terms with said teacher. My third reaction is: what a mess! Two vapidly nice refs + one viciously cruel ref = ?? The truth is that I know neither the student nor the reference writers, and I don't feel like choosing whom to believe.

Essays are somewhat informative, but the prevalence of hired help, coupled with the availability of AI writers, ensures that many essays reveal little about the real applicant. Essays also favor those with better writing and storytelling skills. Besides, this medium favors the well-to-do, who can afford more expensive coaches and can send their kids to far-away places for save-the-world-type experiences. (Somehow, the critics who like to bash the biases of standardized tests are quiet about the obvious biases of other forms of assessment, such as essays.)

Without standardized test scores, the application portfolio contains only subjective items, and picking one applicant over another becomes essentially random. No wonder the colleges admitted underprepared students during the test-optional period.